Are AI scraper bots stealing your bandwidth and copyrighted materials?

Date published: 25 November 2024 | Updated: 28 November 2024

We recently encountered a concerning trend after the performance of one of our clients’ websites declined dramatically in October because of unexpected, persistent calls to its database. The website in question is large, with thousands of product image galleries in its database. After a thorough investigation, we traced the issue to an aggressive bot, ByteSpider, developed by ByteDance, the Chinese company that owns TikTok. This experience sheds light on the challenges website owners face with so-called ‘scraper’ AI bots, and on the crucial difference between these bots and legitimate AI-driven search engine tools.

What are scraper AI bots, and how do they differ from other bots?

Scraper bots are automated tools designed to ‘scrape’ websites, extracting data such as images and text to train their operators’ AI models. In effect, they copy your website’s content for free and add it to their knowledge base. They differ from the familiar web crawlers used by major search engines, such as Google’s Googlebot, which index your site to make it more discoverable. Scraper AI bots are more invasive: they can strain your server resources, hinder site performance, and potentially use your site’s data without consent.

A recent Fortune article reported that ByteSpider was the most aggressive scraper on the internet. On our client’s website, ByteSpider made rapid, repeated database calls that drove up server load and caused significant slowdowns, disrupting the site during peak traffic. Once we identified ByteSpider as the source, we promptly blocked it. The result? The site’s performance metrics improved immediately and returned to normal.

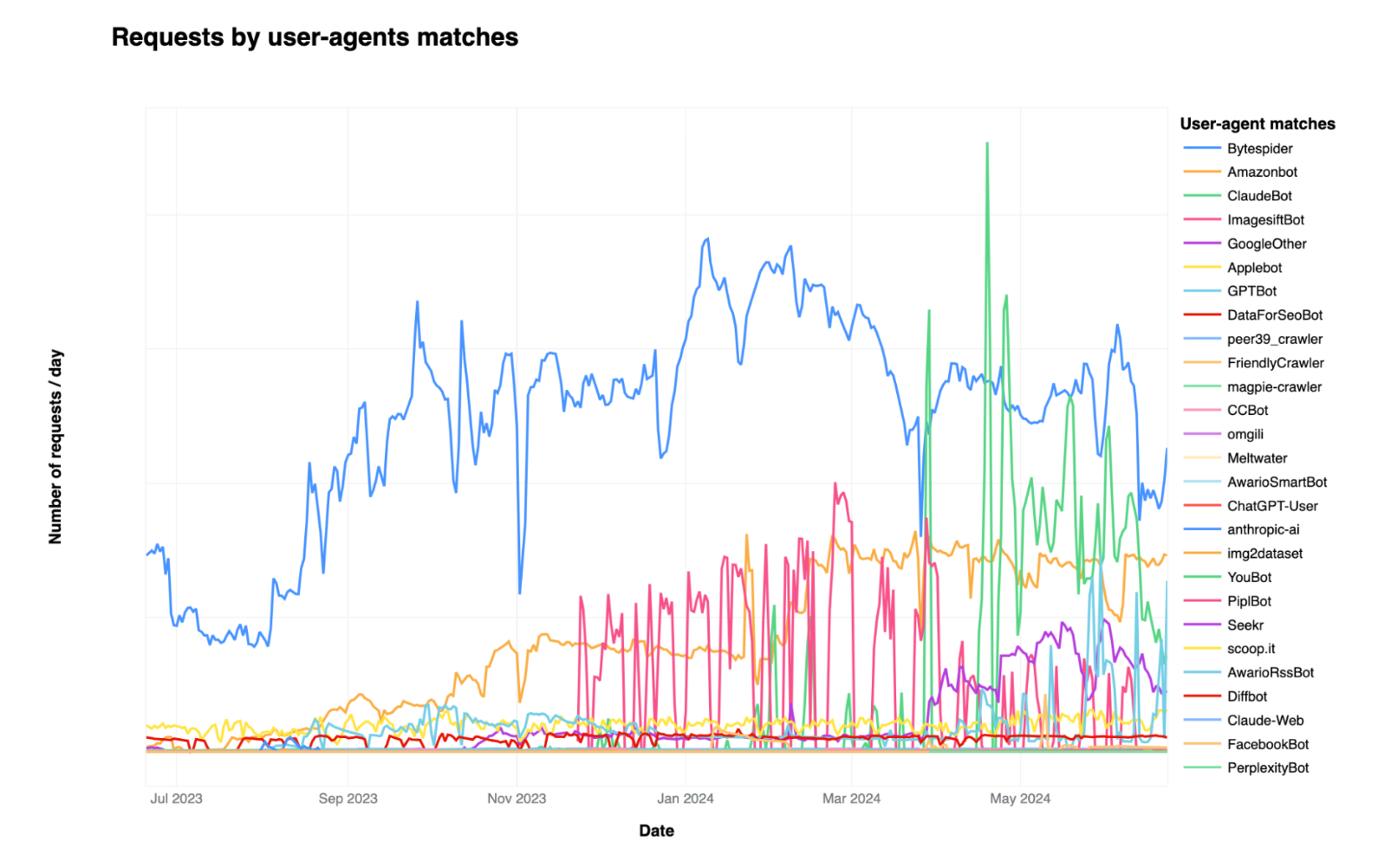

The problem with aggressive AI bots like ByteSpider is that they ignore robots.txt. The robots.txt file is a plain-text file that website owners place at the root of their site to tell individual bots which pages they may and may not access. Compliance is voluntary, so it only works if a bot chooses to respect it. Blocking the bot by IP address is not practical either, because its IP addresses change constantly. The solution? We relied on Cloudflare to block the offending AI crawlers, which underlines the value of the extra layer of protection that security services like this provide. The graph below shows the increasing activity of bots like ByteSpider over time.

Source: Cloudflare

We have since made this standard practice across all the websites we look after. Has your website’s performance taken a nosedive recently for no apparent reason? These AI bots could be the culprit. If you would like advice on how to deal with them, please get in touch.
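Because robots.txt is only a request, it has an effect only when a crawler chooses to read it and check each URL before fetching. The minimal Python sketch below shows that check from the crawler’s side, using the standard library’s `urllib.robotparser` module. The robots.txt content is a hypothetical example that disallows the `Bytespider` user-agent token for the whole site while allowing every other bot, and the helper `is_allowed` is illustrative. A compliant crawler would skip any URL for which the check returns `False`, but nothing enforces this, which is why a bot that ignores robots.txt has to be stopped by other means, such as Cloudflare.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ask Bytespider to stay away from the whole site,
# while leaving every other crawler free to access all pages.
ROBOTS_TXT = """\
User-agent: Bytespider
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/gallery/1"  # placeholder URL
    for agent in ("Bytespider", "Googlebot"):
        print(agent, is_allowed(agent, url))
```

Running it prints `Bytespider False` followed by `Googlebot True`. The check happens entirely inside the crawler’s own code, so a scraper can simply skip it.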

Copyright and intellectual property are at risk

Beyond technical performance, these scraper bots have significant implications for businesses, especially those in the creative industries. One of our clients, Uni Europa, recently held a conference on AI use among creative and cultural workers, highlighting growing concerns about AI’s potential misuse.

This Uni Global news article underscores concerns around generative AI models that source vast amounts of online content, infringing on copyright and exploiting creative works. While traditional AI crawlers serve a legitimate purpose in indexing and refining search capabilities, the more intrusive scraper AI bots can undermine the rights and efforts of content creators.

What AI scrapers do with all the data they mine from websites remains to be seen. In ByteSpider’s case, the data could power TikTok’s newly launched AI features, such as AI-generated ads and avatars, as well as its search engine. Given ByteDance’s aggressive consumption of data, the US government is attempting to limit the Chinese government’s access to user data: President Biden signed a bill to ban TikTok in the US unless ByteDance sold it within the year, and some commentators, such as Mashable, have linked this to the rapid acceleration in the bot’s activity.

The difference between search-friendly crawlers and scraper AI bots lies in their intent. Search engine bots help enhance your content’s visibility; scraper bots often harvest data for use in generative AI models without consent, using original work without proper attribution or compensation.

Should you block these bots?

Website owners and managers must assess whether blocking specific AI bots is necessary to protect their content and maintain site performance. While blocking all bots could impact your site’s discoverability, selectively restricting aggressive bots like ByteSpider can prevent unnecessary data mining and protect your intellectual property.
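Selective blocking can also be applied inside the website’s own application when an edge service is not available. The sketch below is a minimal Python WSGI middleware, written for illustration only (the names `BlockScrapers`, `BLOCKED_AGENTS` and `hello_app` are hypothetical), that refuses requests whose User-Agent header contains the `Bytespider` token and passes all other traffic, including search engine crawlers, through to the site. It only checks the name a bot declares, so a scraper that spoofs its user agent will get past it; services such as Cloudflare can draw on more signals than the user agent alone.

```python
from typing import Callable, Iterable

# Hypothetical blocklist: lowercase substrings matched against the
# User-Agent header. Only the name a bot declares is checked, so a
# scraper that spoofs its user agent is not caught by this filter.
BLOCKED_AGENTS = ("bytespider",)


def is_blocked(user_agent: str) -> bool:
    """Return True if the User-Agent header contains a blocked token."""
    ua = user_agent.lower()
    return any(token in ua for token in BLOCKED_AGENTS)


class BlockScrapers:
    """WSGI middleware that answers blocked user agents with 403 Forbidden."""

    def __init__(self, app: Callable) -> None:
        self.app = app  # the wrapped WSGI application

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        if is_blocked(environ.get("HTTP_USER_AGENT", "")):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        # All other traffic, including search engine crawlers, passes through.
        return self.app(environ, start_response)


def hello_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in for the real site, used only to demonstrate the middleware."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


application = BlockScrapers(hello_app)
```

For local testing, `application` can be served with the standard library’s `wsgiref.simple_server.make_server("", 8000, application).serve_forever()`. Keeping the blocklist short and specific is what preserves discoverability: Googlebot and other search crawlers never match it.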

Practical steps to protect your website from AI scraper bots

- Monitor your traffic: Use tools like Google Search Console to check website performance regularly.

- Monitor server logs: Look for high volumes of requests from unknown sources, or from recognisable scraper user agents, that could indicate the presence of scraper bots.

- Use advanced security tools: Platforms like Cloudflare offer solutions to manage and block unwanted bots and protect your data.
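The server-log check above can start with a simple count of requests per user agent. The following Python sketch assumes access logs in the Combined Log Format, which Nginx uses by default and many Apache setups also use; the function name `top_user_agents` is illustrative. One agent string accounting for a large share of requests, for example one containing `Bytespider`, is a sign worth investigating.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Combined Log Format (assumed):
# host ident authuser [timestamp] "request line" status bytes "referer" "user-agent"
# The pattern captures only the final quoted field, the user agent.
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def top_user_agents(lines: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Return the n user agents with the most requests, busiest first."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:  # silently skip lines that are not in Combined Log Format
            counts[match.group("agent")] += 1
    return counts.most_common(n)
```

Because the function accepts any iterable of lines, an open log file can be passed in directly, for example `top_user_agents(open("access.log", encoding="utf-8"))`, where the path is a placeholder for your own server’s log. Grouping by user agent rather than by IP address suits bots whose IP addresses change constantly.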

As AI continues to evolve, staying informed and proactive is essential. Website owners must understand which bots can benefit their site and which could be detrimental. Balancing discoverability with security and data protection is key.